A UI Testing Framework consists of a set of tools, libraries, methods, and coding practices organized to run automated tests against an application’s User Interface (UI) and produce clear, concise reports. As QA engineers, we can use this framework to verify that the application’s user interface behaves as expected, ensuring functionality, usability, and a consistent user experience.

The framework must be reliable and maintainable, the tests must be readable and maintainable, and the reports must be correct, clear, and easy to understand. To achieve this, we can follow a set of best practices, which we will cover in this blog post.

How to Design and Structure the Framework?

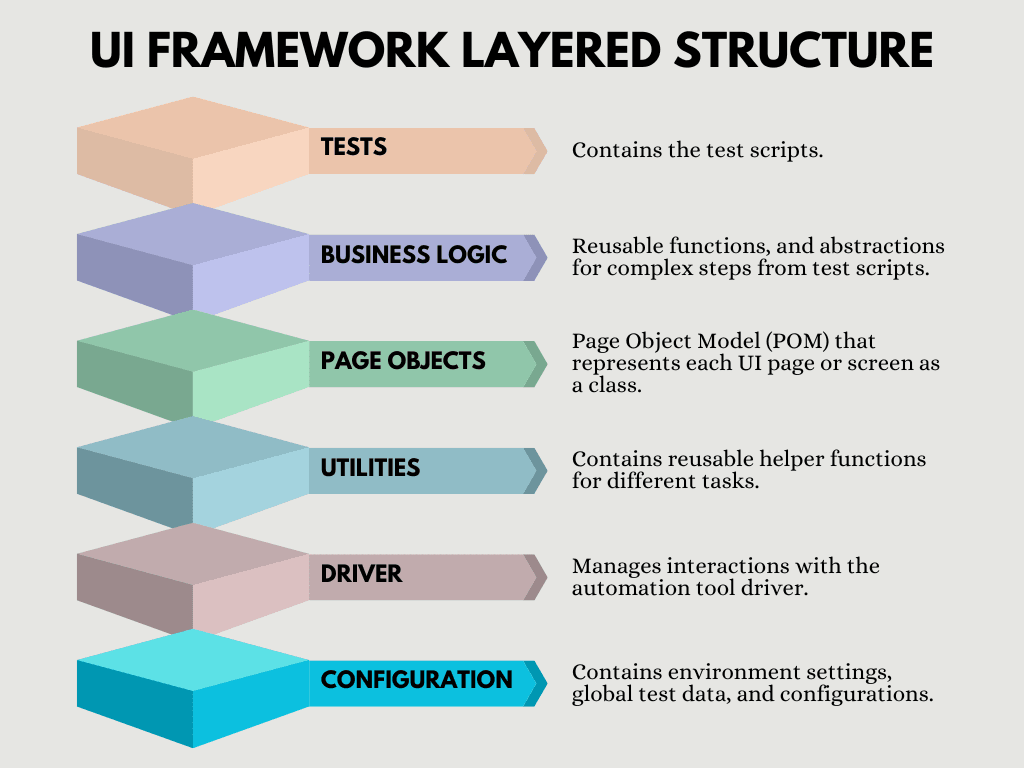

It is a very good practice to give the framework a layered structure. With this approach, you organize the components so that each layer has its own responsibility. This makes the framework more scalable, readable, maintainable, and flexible.

The picture above shows one example of a UI framework with a layered structure, in which each layer is responsible for a different part of the framework.

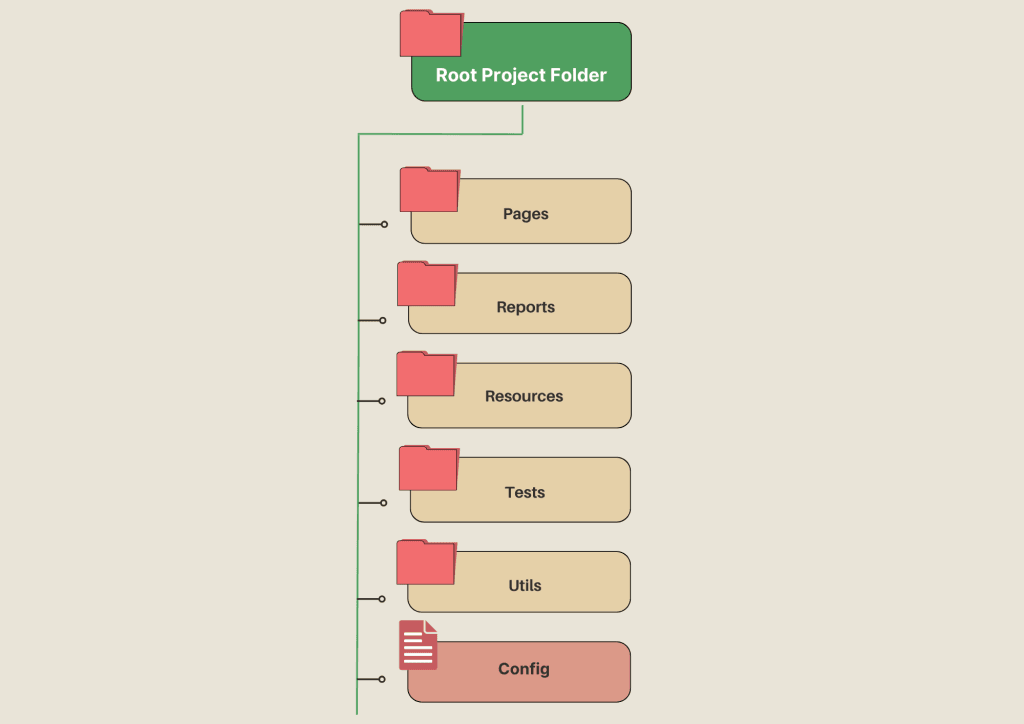

There are a lot of different ways you can organize your files and folders inside your framework, but here is one example of how you can do that by following the Layered Structure:

In the example from above, we have a couple of folders:

- Pages: Folder where you have the Page Object files, like HomePage.js, ProductPage.js, ShoppingCart.js, etc. Inside these files, you can store the UI elements (locators) and their interactions (methods) for each page.

- Reports: Folder where you place the report files generated when the tests are executed. You can install and configure an HTML reporter that generates reports to suit your needs and stores them in HTML or JSON format in this folder.

- Resources: This folder contains all the resources that you are going to use in your tests, like test data files, or any other assets that are used for test execution.

- Tests: Contains all the test script files.

- Utils: This folder contains all the helper functions for tasks like logging, handling configuration, or interacting with APIs. Here you can also put the methods for handling setup and teardown of browser or device sessions.

- Config: In these config files you can store environment settings, global test data, and other configurations. For example, capabilities, URLs, browser types, environments, user credentials, etc.
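As a minimal sketch of the Config layer, the module below keeps environment-specific settings in one place and selects them through an environment variable, so tests never hardcode URLs or browser types. It assumes Node.js with CommonJS modules; the file path, the `TEST_ENV` variable, and all environment names and values are hypothetical placeholders.

```javascript
// config/index.js (hypothetical path): one place for environment-specific settings.
// Environment names, URLs, browsers, and timeouts are placeholders, not real endpoints.
const ENVIRONMENTS = {
  dev: { baseUrl: "https://dev.example.com", browser: "chrome", defaultTimeoutMs: 10000 },
  staging: { baseUrl: "https://staging.example.com", browser: "firefox", defaultTimeoutMs: 15000 },
};

// Selects the environment from a hypothetical TEST_ENV variable, defaulting to "dev".
function loadConfig(envName = process.env.TEST_ENV || "dev") {
  // Only accept environments defined above (ignore inherited properties like "toString").
  if (!Object.prototype.hasOwnProperty.call(ENVIRONMENTS, envName)) {
    const known = Object.keys(ENVIRONMENTS).join(", ");
    throw new Error(`Unknown test environment "${envName}" (expected one of: ${known})`);
  }
  // Return a frozen copy so no test can accidentally change shared settings.
  return Object.freeze({ env: envName, ...ENVIRONMENTS[envName] });
}

module.exports = { loadConfig };
```

A setup helper would then call `loadConfig()` once and read values such as `config.baseUrl`; running the suite against staging only requires setting `TEST_ENV=staging` before your usual test command.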

You can organize the framework based on your needs; this is just one example of how to organize it using the Layered Structure.

Also, make sure that all the methods that you are using have descriptive names, and try to avoid duplicating code across multiple tests. Organize the methods and actions in a way that you can reuse them in multiple tests.
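Putting the Pages layer and the reuse advice together, a Page Object might look like the sketch below. To stay runnable without a browser, it assumes a hypothetical `driver` object with `type(locator, text)`, `click(locator)`, and `getText(locator)` methods; in a real framework these would be thin wrappers around your automation tool (Selenium WebDriver, Playwright, WebdriverIO, etc.). `FakeDriver` exists only to demonstrate the class, and the CSS selectors are placeholders.

```javascript
// pages/LoginPage.js (hypothetical): the locators and actions for one page live together.
class LoginPage {
  constructor(driver) {
    this.driver = driver;
    // Locators are defined once, so a UI change means editing this file only (placeholder selectors).
    this.locators = Object.freeze({
      usernameInput: "#username",
      passwordInput: "#password",
      submitButton: "#login-button",
      errorMessage: "#login-error",
    });
  }

  // Descriptive, reusable action: tests call this instead of repeating the individual steps.
  async logIn(username, password) {
    await this.driver.type(this.locators.usernameInput, username);
    await this.driver.type(this.locators.passwordInput, password);
    await this.driver.click(this.locators.submitButton);
  }

  async getErrorMessage() {
    return this.driver.getText(this.locators.errorMessage);
  }
}

// Demo-only stand-in for a real driver: records actions and returns canned text.
class FakeDriver {
  constructor() {
    this.actions = [];
  }

  async type(locator, text) {
    this.actions.push(`type ${locator} ${text}`);
  }

  async click(locator) {
    this.actions.push(`click ${locator}`);
  }

  async getText(locator) {
    return locator === "#login-error" ? "Invalid credentials" : "";
  }
}
```

A test then calls `await new LoginPage(driver).logIn(username, password)` instead of repeating the three steps, so if the login form changes, only `LoginPage` needs to be updated.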

How to Choose and Define the Locators?

There are many different attributes by which you can locate elements on the page, but always choose stable and unique locators, such as an ID or a name. Avoid locators that may change dynamically when the page loads or as you interact with it, such as auto-generated IDs or long positional XPath expressions.

It is also a very good practice to ask the developers to define attributes that are used for the automation tests and that are unique for each element. For example, for each element define an attribute such as “aria-label” with a unique value, and find all the elements by that attribute. Because “aria-label” is primarily an accessibility attribute whose text is read by screen readers and may change with wording or translations, many teams prefer a dedicated attribute such as “data-testid”.

And like we said before, define the locators in the Page Object class that corresponds to the page where the element is located.
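If the developers agree on a dedicated test attribute, the locator strings can be built from it in one place. This sketch assumes the attribute is `data-testid` (a common convention, not a requirement), and the element names are hypothetical. Playwright, for example, offers `page.getByTestId()`, which looks up `data-testid` by default, so with that tool you may not need a helper at all.

```javascript
// Assumed team convention: every testable element carries a unique data-testid value.
const TEST_ID_ATTRIBUTE = "data-testid";

// Builds a CSS attribute selector, rejecting values that would break the selector's quoting.
function byTestId(testId) {
  if (!/^[\w-]+$/.test(testId)) {
    throw new Error(`Invalid test id "${testId}": use letters, digits, "_" or "-" only`);
  }
  return `[${TEST_ID_ATTRIBUTE}="${testId}"]`;
}

// Page Object locators for a hypothetical product page.
// Prefer these over positional selectors such as "div:nth-child(3) > button",
// which break as soon as the layout changes.
const productPageLocators = Object.freeze({
  addToCartButton: byTestId("add-to-cart"),
  quantityInput: byTestId("quantity"),
  cartBadge: byTestId("cart-badge"),
});
```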

How to Wait and Handle the Elements?

When you interact with the app, there will be scenarios where you have to wait for the page to load or for an element to become visible, clickable, enabled, etc. For these scenarios, use explicit waits, which wait only until the expected condition is met (or a timeout expires). Don’t use hard waits (fixed sleeps) where you hardcode the waiting time: sometimes they slow your tests down unnecessarily, and sometimes the hardcoded time is not long enough. Note that in Selenium terminology an “implicit wait” is something different, a driver-wide timeout for locating elements, and the Selenium documentation advises against mixing implicit and explicit waits. Following these practices helps you avoid flaky tests.
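To show what an explicit wait does under the hood, here is a minimal, tool-agnostic polling helper: it checks a condition repeatedly and returns as soon as the condition is met, or fails with a clear message after a timeout. Automation tools already ship this behavior (for example `driver.wait(until.elementIsVisible(element), timeout)` in Selenium’s JavaScript bindings), so treat the hypothetical `waitUntil` and its options as an illustration, not a replacement.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Polls `condition` until it returns a truthy value or `timeoutMs` elapses.
// Unlike a fixed sleep, it continues as soon as the app is ready.
async function waitUntil(condition, { timeoutMs = 5000, intervalMs = 100, description = "condition" } = {}) {
  const deadline = Date.now() + timeoutMs;
  let lastError;
  while (true) {
    try {
      const result = await condition();
      if (result) return result; // Ready: stop waiting immediately.
    } catch (err) {
      lastError = err; // e.g. "element not found yet": keep polling.
    }
    if (Date.now() >= deadline) {
      const reason = lastError ? ` (last error: ${lastError.message})` : "";
      throw new Error(`Timed out after ${timeoutMs} ms waiting for ${description}${reason}`);
    }
    await sleep(intervalMs); // Short polling interval, not a guess at the total wait time.
  }
}
```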

How to Organize the Test Data?

As we said when describing the Layered Structure, it is a good practice to separate the test data from the test logic. You can store your test data in CSV, XML, or JSON files containing user credentials, test inputs, API payloads, complex configurations, environment-specific settings, etc.

With this approach, you’ll maintain a clean, scalable, and reliable test data structure that simplifies testing and supports long-term maintenance.
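A small loader keeps that separation in place: tests ask for data by file name instead of embedding it. The sketch below assumes Node.js and JSON files (such as a hypothetical `resources/users.json`); it also checks that the keys a test needs exist, so a broken data file fails immediately with a clear message instead of deep inside a test.

```javascript
const fs = require("node:fs");

// Reads a JSON test-data file and verifies that the required top-level keys are present.
function loadTestData(filePath, requiredKeys = []) {
  const data = JSON.parse(fs.readFileSync(filePath, "utf8"));
  const missing = requiredKeys.filter((key) => !(key in data));
  if (missing.length > 0) {
    throw new Error(`Test data file ${filePath} is missing: ${missing.join(", ")}`);
  }
  return data;
}
```

For example, `loadTestData("resources/users.json", ["validUser"]).validUser.username` would return the username stored in that (hypothetical) file.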

How to Handle the Errors?

It is a very good practice to implement error-handling mechanisms that clearly show the error and the reason why the test is failing.

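One good practice is to use try-catch blocks to handle unexpected errors in specific parts of your test scripts. Make sure the catch block adds useful context and rethrows the error (or fails the test), so that failures are never silently swallowed.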
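One way to apply try-catch without hiding failures is to wrap each test step, add context to any error, and rethrow it. The `runStep` helper below is a hypothetical sketch of that pattern; it keeps the original error as `cause` (the `Error` `cause` option needs Node.js 16.9 or later). Avoid putting secrets such as passwords into the context object, because it ends up in logs and reports.

```javascript
// Hypothetical helper: runs one test step and, if it fails, rethrows an error that names
// the step and the data in use. The original error is preserved as `cause`.
async function runStep(stepName, context, action) {
  try {
    return await action();
  } catch (err) {
    // Never put secrets in `context`: this message goes into logs and reports.
    const details = JSON.stringify(context);
    throw new Error(`Step "${stepName}" failed with ${details}: ${err.message}`, { cause: err });
  }
}
```

In a test this could read `await runStep("log in", { user: username, page: pageUrl }, () => submitLoginForm(username, password))`, where `submitLoginForm` stands for any hypothetical page action.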

Also, use clear assertions to validate expected outcomes, and handle failures with appropriate error messages.

const assert = require("node:assert");
assert.strictEqual(actualTitle, expectedTitle, "Page title does not match!");

Log errors with detailed context, including the test step, the data used, and the application state at the time of failure.

throw new Error(`Login failed for user ${username} on page ${pageUrl}`);

How to Create Test Cases That Will Be Maintainable?

Earlier we said that all the test files are stored in one ‘Tests’ folder, but those test files need to be organized in a way that makes them easy to maintain, debug, and read. You can group the test files by feature, functionality, or module, and use subfolders or tags to keep them organized.

Also, don’t name your test files like this:

test1.spec.js

test2.spec.js

...

Instead, give your test files short but descriptive names that describe the test case.

This is also a very good practice overall, not just for the test files. Use descriptive names for all the functions, locators, configurations, test data files, etc., to make the framework readable and maintainable.

Write small test cases that each cover only one user flow and do not depend on one another.
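The toy example below illustrates independence: each test builds its own fresh state through a factory instead of relying on what an earlier test left behind, so the results are the same whatever order the tests run in. `createCart`, `addItem`, and the tiny `runTests` runner are hypothetical stand-ins for your application actions and your real test runner (in Mocha or Jasmine you would typically create fresh state in a `beforeEach` hook).

```javascript
// Hypothetical factory: every test starts from a brand-new cart.
function createCart() {
  return { items: [] };
}

function addItem(cart, productName) {
  cart.items.push(productName);
  return cart;
}

// Small tests with descriptive names; each covers one flow and creates its own data.
const cartTests = {
  "adds a single product to an empty cart": () => {
    const cart = addItem(createCart(), "Backpack");
    return cart.items.length === 1;
  },
  "keeps products in the order they were added": () => {
    const cart = addItem(addItem(createCart(), "Backpack"), "Bike Light");
    return cart.items.join(",") === "Backpack,Bike Light";
  },
};

// Minimal runner used only to show that execution order does not change the outcome.
function runTests(tests, order = Object.keys(tests)) {
  return order.map((name) => ({ name, passed: tests[name]() }));
}
```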

How to Report the Test Results?

After you execute the test cases, it is a very good practice to generate an HTML report that shows which test cases were executed, how many passed, how many failed and why, the percentage of passed and failed tests, etc. Presenting the results in a readable way, with percentages and graphs, helps keep all the stakeholders up to date on the test results.

For this purpose, you can install an HTML reporter such as:

- Mochawesome, a popular HTML and JSON reporter for the Mocha test framework.

- Jasmine HTML Reporter, a built-in or plugin-based HTML reporter for Jasmine tests.

- ExtentReports, an HTML reporting library supporting Selenium, Appium, and other automation tools.

- …
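Whatever reporter you choose, the summary figures are simple to derive. The function below is a sketch that computes totals, pass/fail percentages, and failure reasons from a list of results; the `{ title, state, error }` result shape is an assumption for illustration, not the format of Mochawesome or any other specific reporter.

```javascript
// Computes the headline numbers of a test report from an array of results.
// Assumed result shape: { title: string, state: "passed" | "failed" | "skipped", error?: string }.
function summarizeResults(results) {
  const total = results.length;
  const passed = results.filter((r) => r.state === "passed").length;
  const failed = results.filter((r) => r.state === "failed").length;
  // Percentage rounded to one decimal place; 0 when nothing ran.
  const percent = (count) => (total === 0 ? 0 : Math.round((count / total) * 1000) / 10);
  return {
    total,
    passed,
    failed,
    skipped: total - passed - failed,
    passRate: percent(passed),
    failRate: percent(failed),
    // "Why it failed" is often the most useful part of the report.
    failures: results
      .filter((r) => r.state === "failed")
      .map((r) => ({ title: r.title, reason: r.error })),
  };
}
```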

Version Control, CI/CD

It is a very good practice to store the automation tests in a version control system, like Git. This brings a lot of benefits, such as collaboration (multiple people can work on the same codebase and merge their changes), rollbacks (earlier versions of the code are kept and can be restored if needed), and branch management.

Also, we can include the automation tests in the CI/CD pipeline, where they are triggered as part of the app build process and executed automatically to validate each new build. With this approach, we detect issues sooner, and a new build is not deployed to the environment until all the tests pass.

Share the Knowledge

It is a very good practice to write a README file or a Wiki page about the automation framework. Describe all the requirements that need to be fulfilled to use the framework, how to set it up on a new machine, how to run the tests, how the files are organized, etc.

Also, don’t forget to keep this README file or Wiki page up to date.

You can organize a meeting or prepare a presentation where you will share the knowledge about the framework with the whole team.

Maintenance

Creating the automation tests is just the beginning. Make sure that you maintain the existing tests, update them when the app changes, add new tests for new scenarios, and identify any flaky tests. Analyze the flaky tests carefully and fix them so that they give correct results every time. Also, don’t forget to update the written (textual) test cases and add new ones.
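A simple first step in that analysis is to rerun a suspicious test several times without changing anything and check whether the outcome varies. The hypothetical `checkForFlakiness` helper below sketches this. Many runners also have built-in retry options, but retries alone hide flakiness rather than fix it, which is why the sketch also collects the distinct error messages that point toward the cause.

```javascript
// Reruns the same test `runs` times and classifies the outcome.
// A test that both passes and fails with no code change is flaky.
async function checkForFlakiness(testFn, runs = 10) {
  let passes = 0;
  const errors = [];
  for (let i = 0; i < runs; i += 1) {
    try {
      await testFn();
      passes += 1;
    } catch (err) {
      errors.push(err.message);
    }
  }
  const failures = runs - passes;
  let verdict = "flaky";
  if (failures === 0) verdict = "stable-pass";
  else if (passes === 0) verdict = "stable-fail";
  // Distinct messages usually hint at the cause (timing, test data, environment).
  return { runs, passes, failures, verdict, distinctErrors: [...new Set(errors)] };
}
```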

Here is a checklist that you can follow when you are creating your UI Automation Framework:

Checklist

- Define the Layered Framework Structure.

- Define the Locators in separate Page Object Classes. Use unique locators.

- Notify the developers if stable locators need to be added for some elements.

- Organize the Test Data in separate files.

- Implement Error Handling Mechanisms.

- Define Maintainable Test Cases.

- Install and Configure HTML Reporter.

- Use Version Control.

- Include the tests in the CI/CD Pipeline.

- Write a README file or a Wiki page.

- Share the knowledge with the rest of the team.
